Fighting Human Hubris: Intelligence in Nonhuman Animals and Artefacts

Christian Hugo Hoffmann

Institute for Technology Assessment and Systems Analysis (ITAS), Karlsruhe Institute of Technology (KIT), Germany

christian@hoffmann-economics.com

www.christian-hugo-hoffmann.com


Abstract: 100 years ago, the editors of the Journal of Educational Psychology conducted one of the most famous studies of experts’ conceptions of human intelligence. This was reason enough to prompt the question where we stand today with making sense of “intelligence”. In this paper, we argue that we should overcome our anthropocentrism and appreciate the wonders of intelligence in nonhuman and nonbiological animals instead. For that reason, we study two cases of octopus intelligence and intelligence in machine learning systems to embrace the notion of intelligence as a non-unitary faculty with pluralistic forms. Furthermore, we derive lessons for advancing our human self-understanding.

Keywords: Intelligence; multiple intelligences; octopi; machine learning; pluralism

Introduction

A zoologist from Outer Space would immediately classify us as just a third species of chimpanzee, along with the pygmy chimp of Zaire and the common chimp of the rest of tropical Africa. Molecular genetic studies … have shown that we … share over 98 percent of our genetic program with the other two chimps.

Jared Diamond, 1992: 2

Our crude human chauvinism gave cause for dispute and ridicule early on. La Mettrie (1748/1990: 124) wrote in his magnum opus L’Homme-Machine, for example:

Car c’est elle, c’est cette forte Analogie, qui force tous les Savants et les vrais juges d’avouër que ces êtres fiers et vains, plus distingués par leur orgueil, que par le nom d’Hommes, quelque envie qu’ils aient de s’élever, ne sont au fond que des Animaux, et des Machines perpendiculairement rampantes.1

And indeed, our species-centric view of intelligence may be self-serving, but as a general characteristic intelligence correlates only superficially (and perhaps even negatively) with most measures of evolutionary success. This becomes transparent when we acknowledge the tension between individual humans and some groups of humans (such as distinguished scientists standing on the shoulders of giants) being the most intelligent beings we know of while humans, en masse, appear to be self-destructive, in ways that range from anthropogenic climate change to nuclear war. Why is this relevant and challenging for the notion of intelligence? Because “[a]lmost all definitions of intelligence, for all their disagreements, agree on one thing – that intelligence crucially involves the ability to adapt to the environment” (Sternberg, 2019: 1). We share this understanding of “intelligence” and accept this common denominator in this article.

Some species have been around for much longer than homo sapiens and given the numerous examples of maladaptive human behavior, it is likely that the so-called wise human (homo sapiens) will never become the most successful species in evolutionary terms.2 Still, our experts on intelligence, i.e., psychologists, consider the ability to solve a number-series problem (which can be measured in their IQ tests) a better indicant of “intelligence” than the ability to think of ways to mitigate worldwide disaster (Sternberg, 2019: 10).

In this paper, we argue that it is time to broaden the scope of what we deem intelligent and that we should acknowledge other kinds of intelligence, not least to derive lessons to become a more successful species. This is accomplished by studying the case of octopus intelligence in the next section, as well as the case of intelligence in systems of Artificial Intelligence (AI) of the current machine learning paradigm thereafter.

This paper is a teaser for the more comprehensive study by Hoffmann (2022a). It probably offers no single new result to the versed reader; rather, it reviews the literature on two previously poorly connected case studies, one taken from biology and the other from computer science. The hope, though, is that we bring forward an informative and thought-provoking argument in favor of abandoning an anthropocentric view of intelligence. In the light of octopi and, even more so, machine learning or AI systems, to which this author ascribes some intelligence but which share so little with us humans, such a view is simply not justified. Put differently, we should broaden our perspective on what constitutes intelligence.

Lessons on grasping “intelligence” from studying octopi

In this case study of animal intelligence, we dive into the heterogeneity in evolutionary trajectories by acquainting ourselves with species at another, distant end of the tree of life. The journey down the branches to meet the last (in the sense of most recent) common ancestor of mammals (like apes or us) and birds (like crows, which have also been reported to be very smart; Jelbert et al., 2019) is only half as long as the step back in time we would need to take to encounter the common ancestor that connects us to cephalopods, a group of animals which includes octopi, cuttlefish, and squid, among others. The latter departure happened about 600 million years ago (Godfrey-Smith, 2018: 5), i.e., at a time long before the age of the dinosaurs, when no flora and fauna had made it onto land yet and the largest animals around our common ancestor with cephalopods, something like small, flattened worms, might have been sponges and jellyfish. And while human species have populated the Earth for less than three million years, the oldest possible octopus fossil (octopi do not preserve well) dates from 330 million years ago and another, less ambiguous case from ca. 240 million years ago (The Guardian, 2022).3 Paradoxically to many, cephalopods are much more akin to “simple” beings like clams and snails (since they all belong to the subgroup of mollusks) or invertebrates like ants and termites, which do not have many neurons (e.g., ants have approximately 250,000 neurons, from which it does not follow that they would not be intelligent, cf. Huebner, 2018); and nonetheless we have good reason to present them as a vigorous and outstanding case of animal intelligence.

Due to their large and complex nervous systems, octopi, cuttlefish, and squid can count as an island of mental wealth in the sea of invertebrate animals. Since our most recent common ancestor was so primitive and lies so far back,

cephalopods are an independent experiment in the evolution of large brains and complex behavior. If we can make contact with cephalopods as sentient beings, it is not because of a shared history, not because of kinship, but because evolution built minds twice over. This is probably the closest we will come to meeting an intelligent alien. (Godfrey-Smith, 2018: 9).

Two best-selling books of recent years, the one we have just quoted from and the other titled “The Soul of an Octopus: A Surprising Exploration into the Wonder of Consciousness” by Montgomery (2016), have drawn a broader audience’s attention to animals that not only possess extraordinary physical traits – e.g., an octopus has eight arms, three hearts and blue copper-rich blood (Scales, 2020) – but that moreover captivate us due to their cognitive and behavioral abilities. The broader audience encompasses scientists outside of biology or comparative psychology, animal lovers, curious laypeople and, in particular, philosophers who have brooded over questions about other minds, and the minds of cephalopods are probably the most other of all. Therefore, our outline relies more on Godfrey-Smith’s (2018) more philosophical account than on Montgomery’s (2016) book.

At first glance, it might be astonishing that cephalopods, especially octopi, are equipped with high intelligence, because the literature on intelligence and animal intelligence usually foregrounds complex social life as a key driver of intelligence, in the sense that the mind evolved in response to other minds. This obviously holds for humans (Aristotle’s Zoon Politikon), but is further confirmed by other apes and by cetaceans that live in groups (Humphrey, 1976; Dunbar, 1998; Fox et al., 2017). By contrast, octopi are not very social creatures (this holds less for cuttlefish and squid). They live, hide, and hunt by themselves and usually have a short lifespan of less than five years. The females die once their offspring hatch from the eggs, with the consequence that the offspring are thrown back on themselves to figure out how their body and their world work. They receive little to no parental care and as such are forced to learn rapidly.

At secunda facie, however, we notice that a major shift in the evolution of cephalopod bodies happened when sometime before the era of the dinosaurs (The Guardian, 2022) some of them began to reduce and internalize (squid and cuttlefish) or entirely abandon (octopus) the protective casings or shells that we can still find today in their relatives, the nautilus. This mutation enabled more freedom of movement and flexibility, but at the price of ever-proliferating vulnerability towards predators. Here, we are getting our hands on a hint to resolve the puzzle of octopus intelligence: since the nucleus of intelligence lies in the ability to adapt to the environment (see above as well as Sternberg, 2019: 1), more of this ability is required from octopi when they become more vulnerable towards predators over time.

On top of that, recall the proverb Homo homini lupus est, popularized by Hobbes. Here we (humans) are warned not to trust ourselves to an unknown person, but to beware of him or her as of a wolf. This insight can explain why humans with their complex social life have developed a rich cumulative culture from the state of law to new technologies such as blockchain (which does not presuppose trust between the people involved in a blockchain-based system; Nakamoto, 2008). In this respect, we may view octopi as social too, both literally (they are cannibalistic and sometimes fight other octopi) and figuratively: they interact and engage with other beings when they rove and hunt or are exposed as prey.4 Those situations are “social” too in a way (even though this statement is not in line with how the social brain hypothesis views sociality; i.e., that it is complex social bonds and not volume of interactions that require intelligence; cf. Dunbar, 1998; Humphrey, 1976), as they often require that an animal’s actions are tuned to the actions and perspectives of others. Notwithstanding, one might be entitled to call octopi a non-social form of intelligence (Godfrey-Smith, 2018: 65).

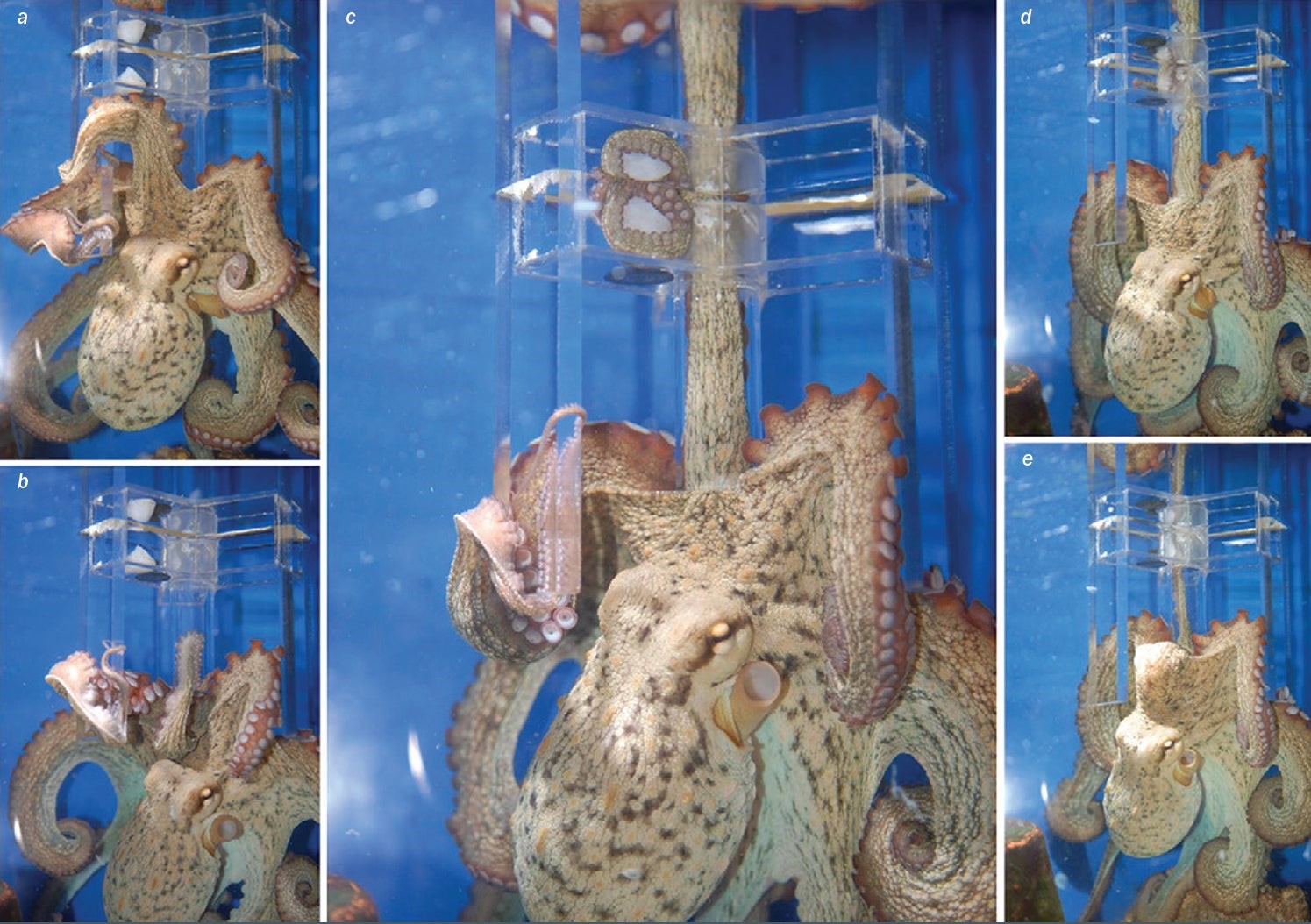

What, then, is special about octopus or cephalopod intelligence? Octopi and other cephalopods have exceptionally good eyes; however, these eyes are built on the same general design as ours (Godfrey-Smith, 2018: 51) and we are not tempted to subsume this feature under the term “intelligence” anyway, just as a dog has a remarkable sense of smell but that is not intelligence. The large nervous system of cephalopods does not make them special either; that they share with mammals in general. Nor can it be their attested brain power, which they, to some degree, have in common with birds like crows or parrots (Godfrey-Smith, 2018: 50; Olkowicz et al., 2016). But how about the fact that there is no part-by-part correspondence between the parts of their brains and ours?5 Indeed, an octopus is an individual characterized by decentralized intelligence (see Figure 1), as the bulk of her neurons is not collected inside her brain; most of her nerve cells are found in her arms (ibid.). As a consequence, the arms have their own controllers and sensors, sensing not only touch, but also chemicals. The question of how an octopus’ brain relates to her arms is a fascinating one, and it has been suggested that the arms seem “curiously divorced” from the brain, at least in the control of basic motions (Hanlon & Messenger, 2018).

Octopi and cuttlefish make colors, and are thereby immensely expressive animals, animals with a lot to “say” which bursts the cramped confines of biological or evolutionary functions like signaling or camouflage. The skin of a cephalopod is a layered screen controlled directly by the brain. Neurons reach from the brain through the body into the skin, where they control muscles, which, in turn, control millions of pixel-like sacs of color (Godfrey-Smith, 2018: 109). If the animal senses or decides something, her color changes in an instant. Given all these idiosyncrasies of the octopus, the route to working out how smart octopi are is to look at what they can do.

When tested in the lab, octopi (on which there is more research than on squid or cuttlefish) have done fairly well, without showing themselves to be Einsteins though (Godfrey-Smith, 2018: 52). They can learn to navigate simple mazes (Godfrey-Smith, 2017; Boal et al., 2000), unscrew jars to obtain the food inside (Richter et al., 2016; Anderson & Mather, 2010), etc., but they are rather slow learners in all these contexts, and experimental results are mixed (Godfrey-Smith, 2018: 52). For example, the well-cited study of octopi opening a bottle to capture a food source is fraught with difficulty, not the least of which is that the experiment only worked if the animal had experience opening containers (Fiorito et al., 1998; Fiorito et al., 1990; cf. also Abramson & Wells, 2018, for the broader context of invertebrates).

However, those observations from human-made settings (like in Figure 1) fit poorly with their behavior in other scenarios as well as their ability to adapt to new, unusual circumstances and to turn the lab paraphernalia around them to their own octopodean purposes. For instance, some octopi in confinement seem to figure out quite quickly, i.e., after a few minutes only, that they can put out the bright lights in an aquarium (which they are said to dislike) by squirting jets of water at them (cf. Bradley, 1974; Browning, 2019). This apparent mismatch may have its roots in a failure of experiments to tap into octopus motivation, which is known as one of many limitations of testing procedures for animal intelligence as Hoffmann (2022a: 49) shows.

Break-out session

Nine common limitations of testing procedures for animal intelligence

- Unnoticed impingement on or manipulation of evaluee by the measurement procedure

- Flawed interpretations of test results: from anthropomorphizing animals (e.g., the case of Clever Hans and math, whales and music, etc.; cf. Sebeok, 1981)

- to underestimating hallmarks of animal intelligence (body language of horses, “communication” skills of whales or decentralized sensing capacities of octopi, etc.; cf., e.g., Noad et al., 2000)

- Tightly controlled scientific work may fall short of accounting for idiosyncrasies, having been undertaken under the assumption that all animals of a given species (and perhaps of a given sex) will be very similar until they encounter different circumstances or rewards. Octopuses are a species with a great deal of individual variability (Semmens et al., 2004).

- Pitfall of test administration: This starts with getting the animal motivated to solve a task or inducing it to display some behavior, and goes on with a step-by-step arrangement of picking the right rewards and stimuli, including other steps or caveats too: How to prevent cheating by the animal? Does the animal want to “communicate” something else to the tester? Maybe, animals follow their own agenda and/or have their own ideas. Or to put it in simple terms, the intelligence evaluation setup has to be intelligent itself.

- Such issues are not different, in principle, to the administration error that happens in human evaluation, but for non-human animals the choice of the interface plays a more crucial role.

- Neglect of emotions in intelligence tests.

- Limitations of comparing features and behaviors with respect to intelligence across different species in different habitats or niches.

- Even slight changes to the task can alter performance, not only across species, but also within a species (e.g., see the six different experimental setups for New Caledonian crows by Jelbert et al., 2014).

Figure 1: An octopus’ arm can taste, touch and move without oversight from the brain. To test if the brain also has centralized, top-down control over the limbs, scientists designed a transparent maze. To reach a treat in the upper left compartment (a and b), the animals had to send an arm out of the water (c), losing guidance from their chemical sensors. They then had to rely on their eyes to direct the arm (d). Most succeeded (e). Source: Godfrey-Smith, 2017.

In some analogy to what was observed with corvids (Jelbert et al., 2019, 2014), it has furthermore been argued for octopi too that they possess higher-order capabilities such as being aware of captivity (unlike fish, for example, that seem to not have the faintest idea that they are in a tank; Stefan Linquist in Godfrey-Smith, 2018: 56). What Godfrey-Smith sees as the distinctive feature of octopus intelligence is their so-called coconut-house behavior, a form of tool use, which illustrates the way they have become smart animals, displaying flexible behavior, creativity and adaptation to their environment (again linking back to Sternberg’s, 2019, notion of adaptive intelligence): Soft-sediment dwelling octopuses have been repeatedly observed carrying around coconut shell halves, assembling them as a shelter only when needed. Whilst being carried, the shells offer no protection and place a requirement on the carrier to use a novel and cumbersome form of locomotion: ‘stilt-walking’ (cf. Finn et al., 2009; Godfrey-Smith, 2013).

Octopi are smart in the sense of being curious and flexible;6 they are adventurous and opportunistic. This is so noteworthy in the example of their coconut-house behavior because to assemble and disassemble a “compound” object like this, and put it to use, is very rare in the animal kingdom (Godfrey-Smith, 2013).

We would add here two more things about the signature of an octopus’ intelligence. On the one hand, as we move up on the tree of life from its dawn to the present day or across the phylogenetic scale, respectively,7 we realize that the behavior of an animal is shaped not primarily by its genes but, in larger and larger measure, by the contingencies that characterize the habitat in which the animal lives. In the same breath, however, a wobbly conclusion is sometimes drawn which at least partially collapses in the light of the lessons from this case study of animal intelligence. Staddon (1983: 395), for instance, writes:

Most animals are small and do not live long […]. A small, brief animal has little reason [in the teleological sense, C.H.] to evolve much learning ability. Because it is small, it can have little of the complex neural apparatus needed; because it is short-lived, it has little time to exploit what it learns. Life is a tradeoff between spending time and energy learning new things, and exploiting things already known. The longer an animal’s life span, and the more varied its niche, the more worthwhile it is to spend time learning. It is no surprise, therefore, that learning plays a rather small part in the lives of most animals.

Two comments on this fragment must suffice. First, the subsequent section of this paper calls into question the claim that a small body and few or no neurons preclude intelligence. The case of octopus intelligence teaches, moreover, that the neural network can be decentralized. Secondly, although octopi are not considered small animals compared to flies, fleas, bugs, nematodes, etc. that comprise most of the fauna of the planet, they have strikingly short lives among the animals of heightened intelligence, thus falsifying the other assertion about the alleged correlation between lifespan and intelligence (Amodio et al., 2019). Summa summarum, much remains for us to learn both about the wonders of intelligence in our world and about the intimate relationship between intelligence and (causal) learning (which is the subject matter of Hoffmann, 2022a).

On the other hand, and in view of our stance for non-monolithic intelligence, we would elaborate on octopi’s high bodily-kinesthetic capabilities as another pinnacle of their intelligence. The octopus’ loss of almost all hard parts (besides, basically, the eyes and the beak) compounded both the threats and the opportunities. A tremendous range of movements became possible, but they had to be orchestrated and made coherent to sort out the problem of coordination. Combined with the requirements posed by their milieu (vulnerable hunting, finding shelter, etc.), their physical traits translate into the very adept use of their body: The same animal can camouflage perfectly, stand tall on her arms, squeeze through a hole little bigger than her eye (octopi also seem to know whether they fit into the hole prior to testing it; Godfrey-Smith, 2017), morph into a streamlined missile, or fold herself to get into a jar.

In nuce, an octopus’ body is not divided from her brain; it is protean, is all possibility and in this sense disembodied. It is suffused with nervousness and lives outside the usual body/brain divide. An animal which is often (especially the first generations) disembodied in the full sense of being immaterial or without any body is the artificial animal, better known as AI. (It would be more precise to say that AI systems are medium independent, not immaterial: “A concrete system is medium independent if what it is does not depend on what physical ‘medium’ it is made of or implemented in. Of course, it has to be implemented in something; and, moreover, that something has to support whatever structure or form is necessary for the kind of system in question.” Haugeland, 1997: 10f.).

Why would anybody think that an “animal” that is not embodied in the world is able to think? It goes without saying “that, in general, intelligent systems ought to be able to act intelligently ‘in’ the world. That’s what intelligence is for, ultimately” (ibid.: 25). To complement this review of non-human intelligence, this is what we look at in the following section and case study.

Lessons on grasping “intelligence” from studying AI and machine learning systems

Pillars of human exceptionalism such as, most saliently, causal reasoning and tool use have been falling (e.g., for crows, cf. Jelbert et al., 2019; or for apes, cf. Köhler, 1925/1976). This certainly puts flesh on the bone for appreciating the diversity and fascination of intelligent life forms. But why should we cease with the avoidance of any kind of anthropocentrism? Would it not make sense now to also leave biocentrism behind to prevent objections on a still arbitrarily myopic scope of intelligence? Classical frontiers between the biological or natural and artificial domains can vanish, once we view computer science as being not so much about technical machines or black boxes; what computer science, by contrast, is mostly about is computation, which is happening within the machine (Levesque, 2018).

When in the 1960s and 1970s AI was in its first flush, the newly minted AI labs were electrified about the seemingly palpable prospect of developing true machine intelligence, but defeated by the complexity and uncertainty encountered in the real world. For a few decades now, there has been excitement once again – for “second wave” AI, for a new “AI spring”. Deep learning programs such as AlphaGo8, which in 2015 became the first computer Go program to “beat” a human professional Go player (Silver & Hassabis, 2016), and affiliated statistical methods, backed by unimagined computational power and reams of Big Data, are “accomplishing” levels of performance which trounce the achievements of the earlier phase. In fact, it has become rather routine to see machines doing things that seemed far off even twenty years ago, such as making a haircut appointment by talking to a real human person, or, for us, sitting in a car that can drive itself on ordinary (not too busy) highways (even though, to be fair, Daimler’s twin robot vehicles VaMP and Vita-21 drove more than 1,000 km on a Paris three-lane highway in standard heavy traffic at speeds up to 130 km/h as early as 1994, albeit without the company realizing the market potential and merely semi-autonomously with human interventions, i.e., still far from level-five autonomy, where “autonomy” is a gradual concept that comes in five stages9).

With such examples of AI accomplishments at hand, it is not necessary to look ahead to the uncertain future, to when “the singularity is [said to be] near” (Kurzweil, 2006), to encounter intelligence in artifacts. Following the well-tested Darwinian perspective of gradualism, the notion of intelligence comes in different stages. Especially with regard to AI of the currently predominating machine learning paradigm, one can group the myriad of incumbent machine learning systems along the application to the broad field of predictions (particularly where the requirements of data affluence and sufficient regularity are fulfilled). This inspired Agrawal et al. (2018) to name their book, as well as AI nowadays, “Prediction machines”, with the telling subtitle being “The simple economics of Artificial Intelligence”. In it, they show that AI is actually flying a false flag. But unlike many explicit AI statements, where intelligence was abandoned in favor of task performance,10 the authors argue that AI is admittedly not about intelligence as a whole, but only about one – albeit essential – component thereof: the faculty to “predict” (which can be applied, for instance, in cancer research)11. The ability to predict does not have to be forward-looking, and, thus, the three authors grasp it simply as the use of information that we already possess, in short, data, to obtain information that we do not yet possess. For example, if certain blood samples, measurements or symptoms of a patient are available as data input, then it can be “predicted” that a tumor is malignant. The main take-away from Agrawal et al.’s book for our paper is that intelligence lies in finding out, to some degree autonomously,12 what will be, or in detecting associations; higher levels of intelligence go beyond that, but go hand in hand with figuring out why a prediction holds true or why a certain correlation exists.
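The notion of “prediction” as the use of available data to fill in missing information can be made concrete with a minimal sketch. The example below is not taken from Agrawal et al. (2018); it assumes a hypothetical toy data set of two invented measurements per patient and uses a simple nearest-neighbor vote, one of many possible techniques, to “predict” a missing label.

```python
from collections import Counter
from math import dist

# Hypothetical toy records: (measurement_a, measurement_b) -> known label.
# The numbers are invented for illustration and carry no medical meaning.
KNOWN_CASES = [
    ((1.0, 1.2), "benign"),
    ((0.8, 1.0), "benign"),
    ((1.1, 0.9), "benign"),
    ((3.0, 3.2), "malignant"),
    ((3.3, 2.9), "malignant"),
    ((2.9, 3.1), "malignant"),
]


def predict(measurements, known_cases=KNOWN_CASES, k=3):
    """Fill in a missing label by majority vote among the k most similar known cases."""
    # Rank the known cases by Euclidean distance to the new measurements.
    nearest = sorted(known_cases, key=lambda case: dist(case[0], measurements))[:k]
    # The most frequent label among the k nearest cases is the "prediction".
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(predict((3.1, 3.0)))
    print(predict((0.9, 1.1)))
```

Such a system detects an association between measurements and labels without any grasp of why the association holds, which is precisely the limit of “prediction” described above.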

Nota bene: this author is not asserting that intelligence lies in every computer system which makes predictions. If a system’s capacity merely echoes the intelligence of another system, e.g., of the AI’s programmers, the first system’s capacity is thereby misleading as an indication of its intelligence (Block, 1981). However, at least the latest AI technology’s performances are no longer all echoes of humans behind the scenes (cf., e.g., Silver et al., 2017 or Tegmark, 2018: 88 for how these experts comment on the creative force of AlphaGo; and cf. Clark, 2016, who emphasizes prediction (machines including the brain) in the study of mind).

The dynamism in thriving AI conveys the genuine or compelling reason for wondering if machines are or should be deemed intelligent. With some restrictions they might have been, by and large, shallow automata in the past, but contempt backfires when we emulate Agrawal et al. (2018) and Hoffmann (2018) who contend that much AI around us today has some intelligence; and it backfires even more, once more critical milestones towards general intelligence in AI engineering are reached.

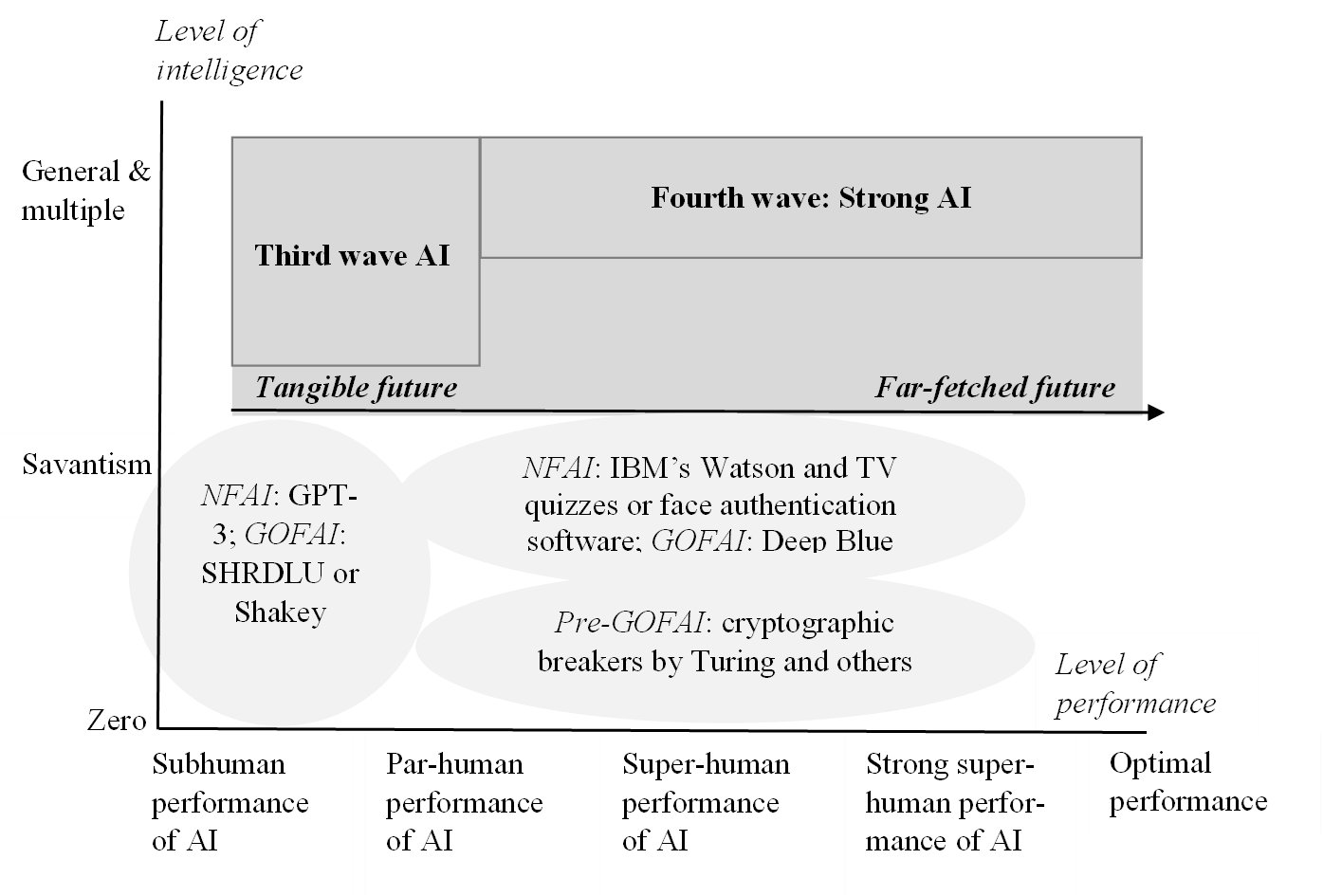

In this connection, we can distinguish four AI development stages and trajectories, from Good-Old-Fashioned AI (GOFAI) systems of especially the 1960s and 1970s, to machine learning or New-Fangled AI (NFAI) of the last twenty to thirty years, to hypothetical AI systems of the third (general AI or AGI) and fourth wave (humanlike AI). This differentiation is summarized graphically in Figure 2.

Figure 2: Four waves determine the AI universe. Prior to the Dartmouth conference in 1956, code-breaking machines became superhuman (i.e., performing better than most humans), if not strong superhuman (i.e., performing better than all humans), in the 1940s with the aid of Turing, who was the central force in the continuing effort to break the Nazis’ Enigma code in the UK during World War II (Robinson, 2020). Some examples of GOFAI, like the NLP program SHRDLU (Winograd, 1972) or Shakey (Nilsson, 1984), which was a robot that could reason about colored blocks in its environment, as well as some instances of NFAI (New-Fangled AI), such as OpenAI’s program GPT-3, featured in the Guardian, are not only idiots savants, but also do worse than most humans for select tasks (a subhuman performance). Other examples of GOFAI like IBM’s Deep Blue (which defeated the reigning chess world champion in 1997) or NFAI like IBM’s question-answering computer system Watson surpass humans in narrow contexts like chess or Jeopardy!, which makes AI undeniably a triumph, but today’s machine kingdom is crowded with blinkered specialists. Human AI experts are, therefore, paving the way for engineering competent generalists, which will herald the third era – a reasonable prospect. By contrast, the outlook to build strong or humanlike AI some day is more speculative or outlandish, depending on whom you ask (cf. Tegmark, 2018: 31). The five-stage scale on the x-axis was proposed by Rajani (2011).

Conclusion

100 years after the survey by the editors of the Journal of Educational Psychology about the meaning of “intelligence”, we have to confess that we have not made much progress in terms of extracting a single satisfying answer from incredibly rich debates on what intelligence is. However, we argued that this would be a misguided goal in light of the fact that intelligence is much more multidirectional than human intelligence(s) can account for.

We presented and discussed two case studies of octopus intelligence and intelligence in machine learning systems to back up the hypotheses that:

- the scope of the concept of intelligence is broad, encompassing humans, animals and machines to divergent degrees, and that

- intelligence manifests itself differently in different entities the concept applies to: hallmarks of intelligence vary between species / entity classes & individuals thereof.

What can we derive from these findings for advancing human self-understanding? We suggest the following points which ought to be spelled out by future research:

- Despite their incommensurability to at least some extent (e.g., the way they solve problems is often quite different), human, animal and AI intelligence share some common themes, such as the faculty to make predictions and identify relationships.

- Non-chauvinistic analyses of minds and mind-related properties are indicated: pillars of our exceptionalism such as, most saliently, causal reasoning, tool use or playing intellectually challenging games such as chess or Go have been falling.

- AI and animal minds can shed light on human minds by serving as a foil for comparison.

- In analogy to colors and systems of colors (Matthen, 2018), there may be many intelligences – indeed, many systems of intelligences – that humans never perceive.

- Rendez-vous with such intelligent “aliens” have happened, e.g., under the sea with octopi.

This paper’s main theme was that traditional views of cognition are inherently anthropocentric and that, in order to fully understand and appreciate non-human intelligence, we must leave such an anthropocentric view behind. A(nother) way to get around the problem of intelligence could be to eliminate the term (along with many others): “it is best simply to get rid of the term ‘intelligence’ […], the ill-fated word from our scientific vocabulary” (Jensen, 1998: 49). This was the point of “radical behaviorism”. One might claim that, unlike the cognitivists, behaviorists are far from dead and are making a comeback (Abramson & Levin, 2021; Abramson et al., 2016; Abramson, 2013). One might wonder why we need the term “intelligence” at all when mathematical models are used. The answer this paper suggested is not to concede victory to radical behaviorists, while clarifying and acknowledging that the challenge posed by the notion of intelligence is indeed major, as it covers a wide spectrum as well as many dimensions. We can learn from philosophy that concepts ought to be clarified and not eliminated (no matter the size of the conceptual problem); the latter would only mean watching our intellectual abilities (which equip us to master such challenges) vanish sooner or later. Moreover, the case study of AI teaches us not to become overly impressed by mathematics and mathematical models, since many aspects such as causal reasoning or common sense are currently not well covered. For more profound information, the interested reader is referred to Hoffmann (2022a).

Notes

1 In English: “For it is this strong analogy, which forces all scientists and true judges to admit that these proud and vain beings, more distinguished by their pride, than by the name of Men, however much they may wish to elevate themselves, are at the end of the day only animals, and perpendicularly crawling machines.”

2 For a clearer definition of what is meant by evolutionary success for the purposes of this argument, cf. Sternberg, 2019.

3 However, how about this philosophical or conceptual objection (credits go to a reviewer): Is “octopus” not a much broader term than “human”? Might a more reasonable comparison be ape? Or primate?

4 From this statement, it does not follow that these features would not apply to other animals as well. The message and take-away here is rather that even octopi can be regarded as social animals even though they might not appear to be social at first glance – unlike many other animals, particularly mammals which are often social in a direct sense, living in groups or taking care of their offspring, etc. (the latter might also clarify why mammals are called mammals).

5 More precisely, cephalopod brains are very different in structure compared to ours, but there do seem to be some analogous components. Cf. Shigeno et al. (2018).

6 Again, the bottom line is not that these would be special characteristics in the animal kingdom (my dog, an Australian shepherd, is surely also curious and flexible) and that these would not apply to other species as well. Instead, the lesson to be learned is that even animals like octopi, which have relatively little in common with Homo sapiens (recall that our most recent common ancestor lived 600 million years ago), can exhibit forms of enhanced intelligence which less than a hundred years ago were only associated with human beings, other apes, and perhaps a few more mammal species. In order to fully understand and appreciate non-human intelligence, we must abandon an anthropocentric view since, in the light of octopi sharing so little with us, it is simply not justified.

7 Nota bene though that the modern view of life does not rank species/lineages/orders in terms of simple or more complex.

8 Strictly speaking and in light of Kautz’s (2020) taxonomy for neurosymbolic AI, DeepMind’s AlphaGo does not count as a standard deep learning program (Type 1), but as a Type 2 hybrid system where the core neural network is loosely coupled with a symbolic problem solver such as, in this case, Monte Carlo tree search.

9 Looking at recent developments in vehicle automation technology, connected and autonomous cars do not seem to be too far away. The first Level-3 autonomous car hit the roads in 2018, and several OEMs claim that Level-5 autonomy will become a reality in 5 to 20 years (Hoffmann & Dahlinger, 2019). But perhaps it will take longer, especially if the rule of thumb holds that the first 80% (i.e., in this case, achieving Levels 1 to 4) are “easily” reached and the remaining 20% pose severe problems.

10 Despite all the technological progress, the field of AI has usually evaluated its artifacts in terms of task performance, not really in terms of intelligence; and if the term “intelligence” is not just of anecdotal value, but comes with some actual bearing, then it is reduced to task performance, as in the case of Newell & Simon (1976/1997: 83): “For all information is processed by computers in the service of ends, and we measure the intelligence of a system by its ability to achieve stated ends in the face of variations, difficulties, and complexities posed by the task environment”, complemented by (ibid. 97): “[…] it is natural that much of the history of artificial intelligence is taken up with attempts to build and understand problem-solving systems”.

11 Scientists have developed AI tools to aid screening tests for several kinds of cancer, including breast cancer. AI-based computer programs have been used to help doctors interpret mammograms for more than 20 years.

12 Or semi-formally: X is autonomous to a degree y with respect to an action H if, and only if, X is independent of the influence of any other agents Z = {z1, z2, …} to the extent y, as far as the mechanism yielding the action H is concerned. Whether the fact that, for instance, some AI systems (such as those in vehicles for autonomous driving) rely on randomness (or pseudo-randomness) affects the AI system’s (or, generally, X’s) autonomy is a question for another work. Cf. Hoffmann, 2022b.

References

Abramson, C., & Levin, M. 2021. Behaviorist approaches to investigating memory and learning: A primer for synthetic biology and bioengineering. Communicative & Integrative Biology, 14: 230–247.

Abramson, C., & Wells, H. 2018. An Inconvenient Truth: Some Neglected Issues in Invertebrate Learning. Perspectives on Behavior Science, 41: 395–416.

Abramson, C., Dinges, C.W., & Wells, H. 2016. Operant Conditioning in Honey Bees (Apis mellifera L.): The Cap Pushing Response. PLoS ONE, 11: e0162347.

Abramson, C. 2013. Problems of Teaching the Behaviorist Perspective in the Cognitive Revolution. Behavioral Sciences, 3: 55–71.

Agrawal, A., Gans, J., & Goldfarb, A. 2018. Prediction Machines: The simple economics of Artificial Intelligence. Boston: Harvard Business Review Press.

Amodio, P., Boeckle, M., Schnell, A.K., Ostojić, L., Fiorito, G., & Clayton, N.S. 2019. Grow Smart and Die Young: Why Did Cephalopods Evolve Intelligence? Trends in Ecology & Evolution, 34: 45–56.

Anderson, R.C., & Mather, J.A. 2010. It’s all in the cues: Octopuses (Enteroctopus dofleini) learn to open jars. Ferrantia, 59: 8–13.

Block, N. 1981. Psychologism and behaviorism. Philosophical Review, 90: 5–43.

Boal, J.G., Dunham, A.W., Williams, K.T., & Hanlon, R.T. 2000. Experimental evidence for spatial learning in octopuses (Octopus bimaculoides). Journal of Comparative Psychology, 114: 246–252.

Bradley, E.A. 1974. Some observations of Octopus joubini reared in an inland aquarium. Journal of Zoology, 173: 355–368.

Browning, H. 2019. What is good for an octopus? Animal Sentience, 26. Available at: http://philarchive.org/archive/BROWIG-4 (09-06-22).

Clark, A. 2016. Surfing Uncertainty: Prediction, Action, and the Embodied Mind. Oxford: Oxford University Press.

Diamond, J. 1992. The Third Chimpanzee: The Evolution and Future of the Human Animal. New York: HarperCollins Publishers.

Dunbar, R.I.M. 1998. The social brain hypothesis. Evolutionary Anthropology, 6: 178–190.

Finn, J.K., Tregenza, T., & Norman, M.D. 2009. Defensive tool use in a coconut-carrying octopus. Current Biology, 19: R1069–R1070.

Fiorito, G., Biederman, G.B., Davey, V.A., & Gherardi, F. 1998. The role of stimulus preexposure in problem solving by Octopus vulgaris. Animal Cognition, 1: 107–112.

Fiorito, G., Von Planta, C., & Scotto, P. 1990. Problem solving ability of Octopus vulgaris Lamarck (Mollusca, Cephalopoda). Behavioral and Neural Biology, 53: 217–230.

Fox, K.C.R., Muthukrishna, M., & Shultz, S. 2017. The social and cultural roots of whale and dolphin brains. Nature Ecology & Evolution, 1: 1699–1705.

Godfrey-Smith, P. 2018. Other Minds: The Octopus and the Evolution of Intelligent Life. London: William Collins.

Godfrey-Smith, P. 2017. The Mind of an Octopus. Eight smart limbs plus a big brain add up to a weird and wondrous kind of intelligence. Scientific American. Available at: http://www.scientificamerican.com/article/the-mind-of-an-octopus/ (04-12-21).

Godfrey-Smith, P. 2013. Cephalopods and the evolution of the mind. Pacific Conservation Biology, 19: 4–9.

Hanlon, R.T., & Messenger, J.B. 2018. Cephalopod Behaviour. Cambridge: Cambridge University Press.

Haugeland, J. 1997. What is Mind design? In. J. Haugeland (Ed.). Mind Design II: Philosophy, Psychology, and Artificial Intelligence. Cambridge, MA: Bradford Books: 1–28.

Hoffmann, C.H. 2022a. The Quest for a Universal Theory of Intelligence: The Mind, the Machine, and Singularity Hypotheses. Berlin: De Gruyter.

Hoffmann, C.H. 2022b. Nietzschean perspectives on intelligence: In need of more plurality for making sense of intelligence. Journal of Artificial Intelligence Humanities, 10: 9–52.

Hoffmann, C.H., & Dahlinger, A. 2019. How capitalism abolishes itself in the digital era in favour of robo-economic systems: socio-economic implications of decentralized autonomous self-owned businesses. Foresight, 22: 53–67.

Hoffmann, C.H. 2018. Die Kunst der Vorhersage. Buchbesprechung “Prediction machines. The simple economics of Artificial Intelligence.” Springer Spektrum. Available at: http://www.spektrum.de/rezension/buchkritik-zu-prediction-machines/1603000 (07-11-2021).

Huebner, B. 2018. Kinds of Collective Behavior and the Possibility of Group Minds. In. K. Andrews & J. Beck (Eds.). The Routledge Handbook of Philosophy of Animal Minds. New York: Routledge: 390–397.

Humphrey, N. 1976. The Social Function of Intellect. In. P.P.G. Bateson, & R.A. Hinde. (Eds.). Growing Points in Ethology. Cambridge: Cambridge University Press: 303–317.

Jelbert, S.A., Miller, R., Schiestl, M., Boeckle, M., Cheke, L., Gray, R., Taylor, A., & Clayton, N. 2019. New Caledonian crows infer the weight of objects from observing their movements in a breeze. Proceedings of the Royal Society B: Biological Sciences, 286: 20182332.

Jelbert, S.A., Taylor, A.H., Cheke, L.G., Clayton, N.S., & Gray, R.D. 2014. Using the Aesop’s fable paradigm to investigate causal understanding of water displacement by New Caledonian crows. PLoS ONE, 9: e92895.

Jensen, A. 1998. The g factor: the science of mental ability, Psycoloquy. Am. Psychol. Assn. Available at: http://psychprints.ecs.soton.ac.uk/archive/00000658/.

Kautz, H. 2020. The Third AI Summer, AAAI Robert S. Engelmore Memorial Lecture, Thirty-fourth AAAI Conference on Artificial Intelligence. New York, NY, February 10, 2020. http://www.cs.rochester.edu/u/kautz/talks/index.html. (12-01-22).

Köhler, W. 1925/1976. The Mentality of Apes. New York: Liveright Publishing.

Kurzweil, R. 2006. The Singularity Is Near: When Humans Transcend Biology. New York: Penguin USA.

La Mettrie, J.O. 1748/1990. Die Maschine Mensch: L’homme machine. Französisch-Deutsch. Ed. and transl. by C. Becker. Hamburg: Meiner.

Levesque, H.J. 2018. Common Sense, The Turing Test, and the Quest for Real AI. Cambridge, MA: MIT Press.

Matthen, M. 2018. Novel Colours in Animal Perception. In. K. Andrews & J. Beck (Eds.). The Routledge Handbook of Philosophy of Animal Minds. New York City, NY: Routledge: 65–75.

Montgomery, S. 2016. The Soul of an Octopus: A Surprising Exploration into the Wonder of Consciousness. New York City: Simon & Schuster.

Nakamoto, S. 2008. Bitcoin: A peer-to-peer electronic cash system. Available at: http://bitcoin.org/bitcoin.pdf (09-06-22).

Newell, A., & Simon, H.A. 1976/1997. Computer science as empirical inquiry: Symbols and search. In. J. Haugeland (Ed.). Mind Design II: Philosophy, Psychology, and Artificial Intelligence. Cambridge, MA: Bradford Books: 81–110.

Nilsson, N. (Ed.). 1984. Shakey The Robot. Technical Note 323. Menlo Park, CA: AI Center, SRI International.

Noad, M., Cato, D., Bryden, M., Jenner, M.-N., & Jenner, K.C.S. 2000. Cultural revolution in whale songs. Nature, 408: 537.

Olkowicz, S., Kocourek, M., Lučan, R.K., Porteš, M., Fitch, W.T., Herculano-Houzel, S., & Němec, P. 2016. Birds have primate-like numbers of neurons in the forebrain. Proceedings of the National Academy of Sciences of the United States of America, 113: 7255–7260.

Rajani, S. 2011. Artificial Intelligence – man or machine. International Journal of Information Technology, 4: 173–176.

Richter, J.N., Hochner, B., & Kuba, M.J. 2016. Pull or Push? Octopuses Solve a Puzzle Problem. PLoS ONE, 11: e0152048.

Robinson, A. 2020. The code-breakers who led the rise of computing. Book review. Nature, 586: 492–493.

Scales, H. 2020. How many hearts does an octopus have? BBC Science Focus Magazine. Available at: http://www.sciencefocus.com/nature/why-does-an-octopus-have-more-than-one-heart/ (04-12-21).

Sebeok, T. (Ed.). 1981. The Clever Hans Phenomenon: Communication with Horses, Whales, Apes, and People. Annals of the New York Academy of Sciences, 364: vii-viii, 1–309.

Semmens, J.M., Pecl, G.T., Villanueva, R., Jouffre, D., Sobrino, I., Wood, J.B., & Rigby, P.R. 2004. Understanding octopus growth: patterns, variability and physiology. Marine and Freshwater Research, 55: 367–377.

Shigeno, S., Andrews, P., Ponte, G., & Fiorito, G. 2018. Cephalopod Brains: An Overview of Current Knowledge to Facilitate Comparison With Vertebrates. Frontiers in Physiology, 9: 952.

Silver, D., Schrittwieser, J., Simonyan, K., & Antonoglou, I. 2017. Mastering the game of Go without human knowledge. Nature, 550: 354–359.

Silver, D., & Hassabis, D. 2016. AlphaGo: Mastering the ancient game of Go with Machine Learning. Google AI Blog. Available at: http://ai.googleblog.com/2016/01/alphago-mastering-ancient-game-of-go.html (15-03-2022).

Staddon, J.E.R. 1983. Adaptive Behavior and Learning. Cambridge: Cambridge University Press.

Sternberg, R.J. 2019. A Theory of Adaptive Intelligence and Its Relation to General Intelligence. Journal of Intelligence, 7: 23.

Tegmark, M. 2018. Life 3.0: Being Human in the Age of Artificial Intelligence. London: Penguin.

The Guardian, 2022. Octopi were around before dinosaurs, fossil find suggests. Available at: http://www.theguardian.com/science/2022/mar/08/octopi-were-around-before-dinosaurs-fossil-find-suggests (09-06-22).

Winograd, T. 1972. Understanding Natural Language. San Diego: Academic Press.